Realtime Surf Condition Detection

LIVE Realtime Youtube Feed

Breakzone Detection

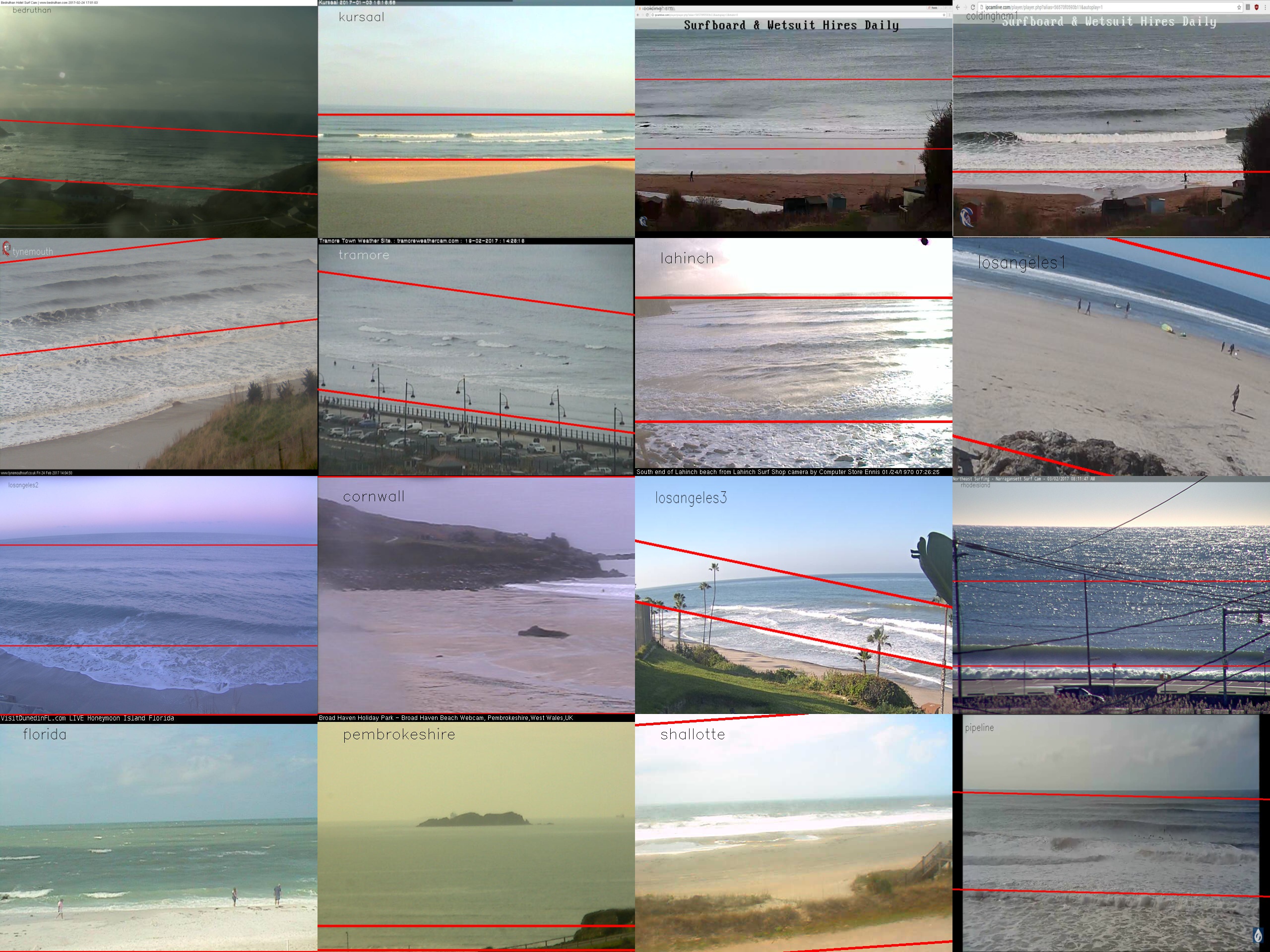

First, I built an algorithm that draws a boundary around the surf zone using the cross-correlation of movement from the top to the bottom of the image.
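The write-up does not give implementation details for this step, so the following is only one possible reading of it, sketched in NumPy. It assumes a stack of grayscale frames, measures motion per image row from frame differences, and cross-correlates each row with the row below it one frame later, since waves travel down the image. The function names, the `lag` of one frame, and the `threshold` of 0.5 are all hypothetical, and the result is only a top and bottom row rather than a full outline.

```python
import numpy as np

def row_motion(frames):
    """Mean absolute frame difference per image row. frames: (T, H, W) -> (T-1, H)."""
    diffs = np.abs(np.diff(frames.astype(np.float64), axis=0))
    return diffs.mean(axis=2)

def lagged_row_correlation(motion, lag=1):
    """Normalised cross-correlation between row r at time t and row r+1 at time t+lag."""
    upper = motion[:-lag, :-1]          # rows 0..H-2 at time t
    lower = motion[lag:, 1:]            # rows 1..H-1 at time t+lag
    upper = upper - upper.mean(axis=0)
    lower = lower - lower.mean(axis=0)
    denom = np.sqrt((upper ** 2).sum(axis=0) * (lower ** 2).sum(axis=0))
    corr = np.zeros(upper.shape[1])
    # Rows with no motion at all have a zero denominator and keep a correlation of 0.
    np.divide((upper * lower).sum(axis=0), denom, out=corr, where=denom > 0)
    return corr

def breakzone_rows(frames, lag=1, threshold=0.5):
    """Return (top_row, bottom_row) of the band with correlated downward motion, or None."""
    corr = lagged_row_correlation(row_motion(frames), lag)
    active = np.flatnonzero(corr > threshold)
    if active.size == 0:
        return None
    # corr[i] describes the row pair (i, i+1), so the band ends one row below the last hit.
    return int(active[0]), int(active[-1]) + 1
```

On real webcam footage the lag would have to match how many rows a wave travels per frame, and the correlation profile would likely need smoothing before thresholding.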

Checking for Surf

Using custom convolutions and Hough lines, I built a basic algorithm to check whether lines of surf are visible on any given webcam. I filtered these Hough lines based on a set of conditions.

| No Surf | Surf |

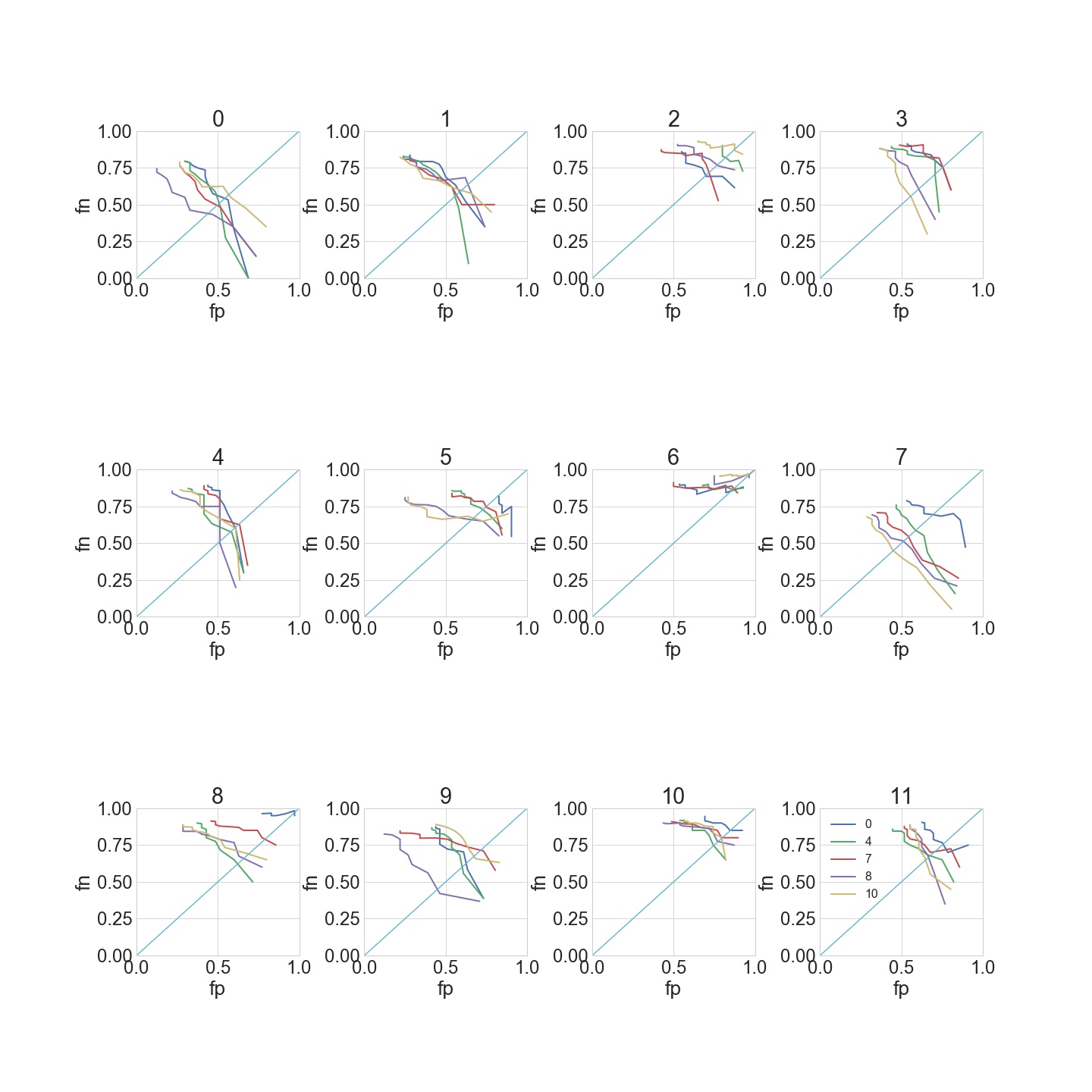
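The exact filtering conditions are not listed here, so the sketch below uses stand-in conditions purely for illustration: keep segments that are close to horizontal and longer than a minimum length, then call a frame "surf" if enough segments survive. It assumes segments in the `(x1, y1, x2, y2)` layout that OpenCV's `cv2.HoughLinesP` returns; the function names and the thresholds `max_angle_deg`, `min_length`, and `min_lines` are hypothetical.

```python
import math

def filter_surf_lines(segments, max_angle_deg=15.0, min_length=40.0):
    """Keep segments that are long enough and close to horizontal.

    segments: iterable of (x1, y1, x2, y2) tuples in pixel coordinates.
    The two conditions are illustrative assumptions, not the original ones.
    """
    kept = []
    for x1, y1, x2, y2 in segments:
        dx, dy = x2 - x1, y2 - y1
        if math.hypot(dx, dy) < min_length:
            continue
        angle = abs(math.degrees(math.atan2(dy, dx)))
        angle = min(angle, 180.0 - angle)  # fold so segment direction does not matter
        if angle <= max_angle_deg:
            kept.append((x1, y1, x2, y2))
    return kept

def surf_visible(segments, min_lines=3, **filter_kwargs):
    """Classify a frame as 'surf' when enough segments pass the filter."""
    return len(filter_surf_lines(segments, **filter_kwargs)) >= min_lines
```

With OpenCV, the output of `cv2.HoughLinesP` has shape `(N, 1, 4)`, so it could be passed in as `lines.reshape(-1, 4)`.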

A range of convolutions yielded the following results on the grid of test webcams.

Measuring the Surf with ML

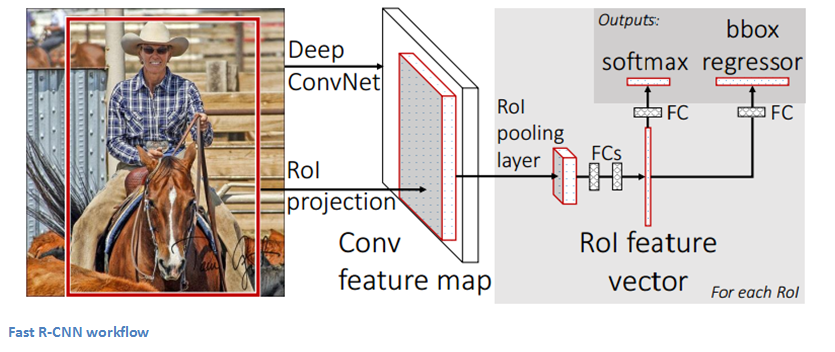

Using a region-based convolutional neural network (R-CNN), I trained a detection algorithm for two features of a breaking wave. The first detector was trained to recognise the wave peak and the second the pocket of the wave as it breaks.

The purpose of R-CNNs is to solve the problem of object detection: given an image, we want to draw bounding boxes around all of the objects in it. The process can be split into two general components, the region proposal step and the classification step. R-CNN uses Selective Search for the region proposal step, which generates around 2000 regions that have the highest probability of containing an object. These proposals are then "warped" to a fixed image size that can be fed into a trained CNN, which extracts a feature vector for each region.

This vector is then used as the input to a set of linear SVMs, one trained per class, which output a classification. The vector is also fed into a bounding box regressor to obtain the most accurate coordinates. Non-maximum suppression is then used to discard bounding boxes that overlap significantly with a higher-scoring box.

The whole process is made faster by sharing the computation of the conv layers between proposals and by swapping the order of generating region proposals and running the CNN, which is the approach taken by Fast R-CNN. In this model, the image is first fed through a ConvNet, the features of the region proposals are taken from the last feature map of the ConvNet, and finally come the fully connected layers together with the regression and classification heads.
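The non-maximum suppression step described above can be sketched in NumPy as follows. This is a minimal greedy version, not the code used in the project: boxes are assumed to be `(x1, y1, x2, y2)` with positive area, and the `iou_threshold` of 0.5 is an assumed default.

```python
import numpy as np

def iou(box, boxes):
    """Intersection over union between one box and an (N, 4) array of boxes."""
    x1 = np.maximum(box[0], boxes[:, 0])
    y1 = np.maximum(box[1], boxes[:, 1])
    x2 = np.minimum(box[2], boxes[:, 2])
    y2 = np.minimum(box[3], boxes[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    area = (box[2] - box[0]) * (box[3] - box[1])
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    return inter / (area + areas - inter)

def non_max_suppression(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: keep the best-scoring box, drop boxes overlapping it, repeat.

    Returns the indices of the kept boxes, highest score first.
    """
    boxes = np.asarray(boxes, dtype=float)
    order = np.argsort(scores)[::-1]      # indices sorted by descending score
    keep = []
    while order.size > 0:
        best = order[0]
        keep.append(int(best))
        rest = order[1:]
        overlaps = iou(boxes[best], boxes[rest])
        order = rest[overlaps <= iou_threshold]
    return keep
```

For two heavily overlapping detections of the same wave peak plus one detection elsewhere in the frame, only the higher-scoring box of the overlapping pair and the separate box are kept.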

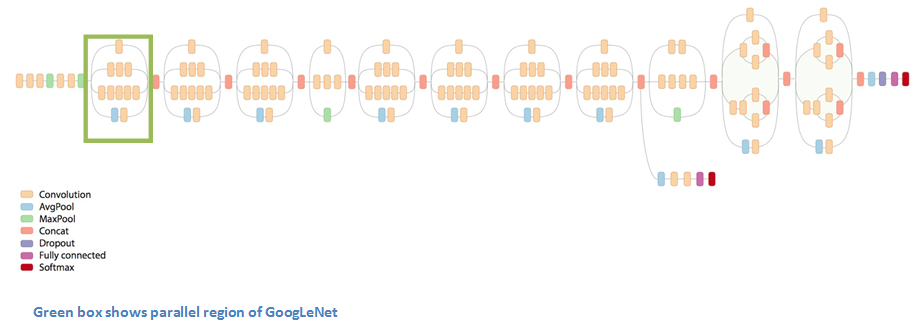

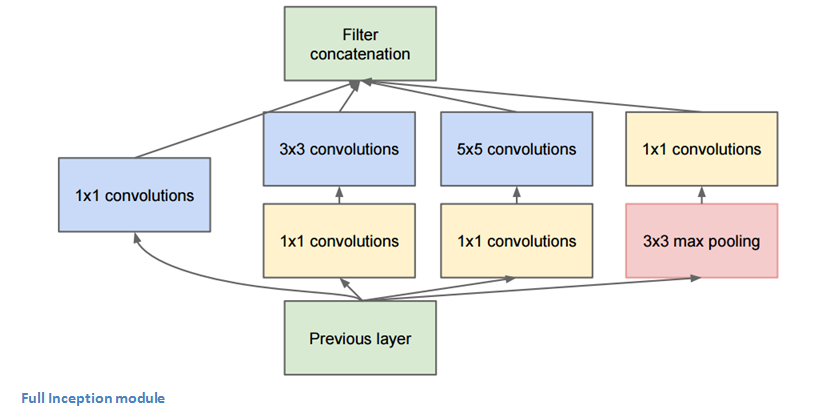

I used the GoogLeNet layer structure for the ConvNet step. GoogLeNet's layout uses parallel computation through Inception modules. The entire layout is shown above, along with an enlarged Inception module.

The bottom green box is our input and the top one is the output of the model. At each layer of a traditional ConvNet, you have to choose between a pooling operation and a conv operation. An Inception module lets you perform all of these operations in parallel, which allows for faster forward propagation when detecting waves.

The module consists of a network-in-network layer (a 1x1 convolution), a medium-sized filter convolution (3x3), a large-sized filter convolution (5x5), and a pooling operation. The network-in-network conv extracts information about the very fine-grained details in the volume, while the 5x5 filter covers a large receptive field of the input and so extracts coarser information as well. The pooling operation helps to reduce spatial sizes and combat overfitting. On top of all of that, a ReLU follows each conv layer, which adds nonlinearity to the network.
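To make the parallel structure concrete, here is a small NumPy sketch of a single Inception-style forward pass. It is an illustration with random weights, not the trained network: the four branches (1x1, 1x1 then 3x3, 1x1 then 5x5, and 3x3 max pool then 1x1) each see the same input and their outputs are concatenated along the channel axis. The helper names and the branch widths are arbitrary assumptions chosen to keep the example small.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def relu(x):
    return np.maximum(x, 0.0)

def conv2d_same(x, w):
    """Stride-1, zero-padded convolution. x: (C_in, H, W), w: (C_out, C_in, k, k), k odd."""
    k = w.shape[-1]
    pad = k // 2
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))
    windows = sliding_window_view(xp, (k, k), axis=(1, 2))  # (C_in, H, W, k, k)
    return np.einsum("chwij,ocij->ohw", windows, w)

def max_pool_same(x, k=3):
    """Stride-1 max pooling that keeps the spatial size. x must be a float array."""
    pad = k // 2
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)), constant_values=-np.inf)
    return sliding_window_view(xp, (k, k), axis=(1, 2)).max(axis=(3, 4))

def random_weights(c_in, rng, n1=4, n3r=3, n3=6, n5r=2, n5=3, npool=2):
    """Random filters for each branch; the widths are illustrative only."""
    def w(c_out, c_prev, k):
        return rng.standard_normal((c_out, c_prev, k, k)) * 0.1
    return {
        "1x1": w(n1, c_in, 1),
        "3x3_reduce": w(n3r, c_in, 1), "3x3": w(n3, n3r, 3),
        "5x5_reduce": w(n5r, c_in, 1), "5x5": w(n5, n5r, 5),
        "pool_proj": w(npool, c_in, 1),
    }

def inception_module(x, weights):
    """Run the four branches on the same input and stack them along the channel axis."""
    b1 = relu(conv2d_same(x, weights["1x1"]))
    b2 = relu(conv2d_same(relu(conv2d_same(x, weights["3x3_reduce"])), weights["3x3"]))
    b3 = relu(conv2d_same(relu(conv2d_same(x, weights["5x5_reduce"])), weights["5x5"]))
    b4 = relu(conv2d_same(max_pool_same(x), weights["pool_proj"]))
    return np.concatenate([b1, b2, b3, b4], axis=0)
```

With the default widths, an input of shape `(8, 6, 6)` produces an output of shape `(15, 6, 6)`: the spatial size is unchanged and the channel count is the sum of the four branch widths (4 + 6 + 3 + 2). The 1x1 "reduce" convolutions in front of the 3x3 and 5x5 filters shrink the channel count first, which keeps the larger filters cheap.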

Cameras Included In the Study:

- Test Data 1

- Test Data 2

- Test Data 3

- Huntington Beach Pier, California

- Topanga Close-up, California

- The Point at San Onofre, California

- Old Mans at San Onofre, California

- Oceanside Pier, Northside, California

- Swamis, California

- Pipes, California

- Cardiff Reef Overview, California

- Gas Chambers, Oahu

- Pipeline, Oahu

- Chun's, Oahu

- Laniakea, Oahu

- Inside Laniakea, Oahu

- Waikiki Beach, Oahu

- Padang Padang, Indonesia

- Balangan, Indonesia

- Keramas, Indonesia

- Santana, Nicaragua

- Santana, Nicaragua

- Portreath, England

- Guethary - Parlementia, France